We introduce AgriNav-Sim2Real, a large-scale dataset for greenhouse navigation that bridges synthetic (Unreal Engine + AirSim) and real (handheld/UGV/drone) captures.

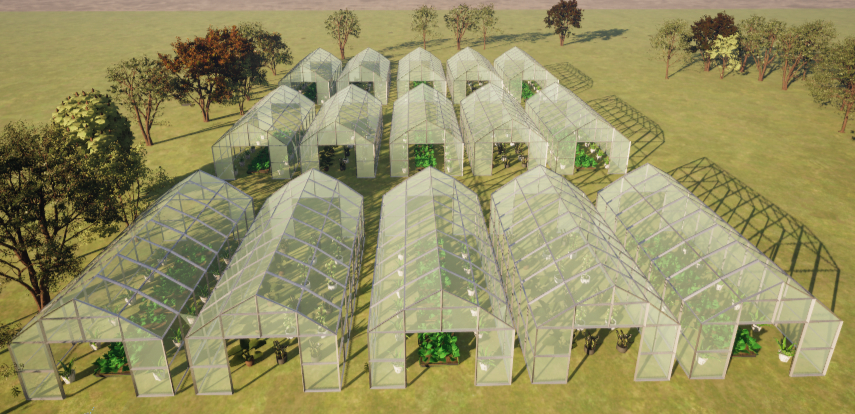

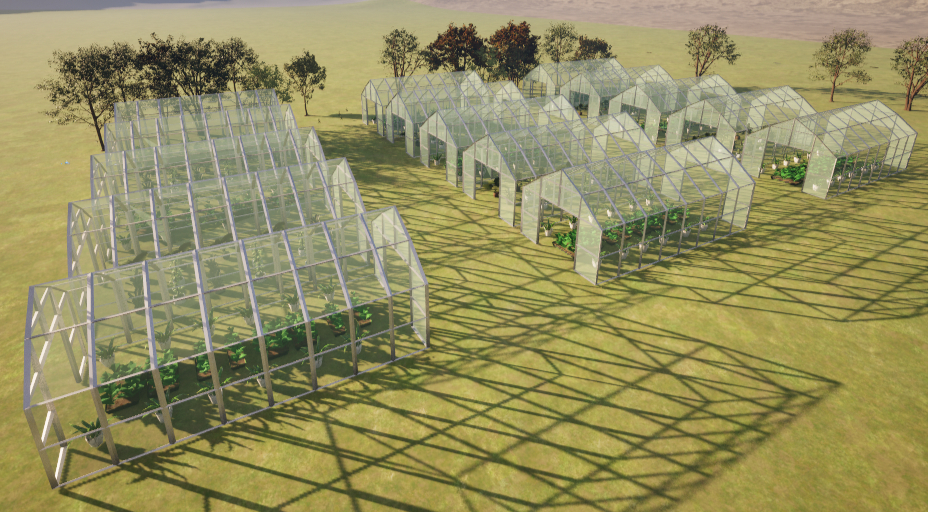

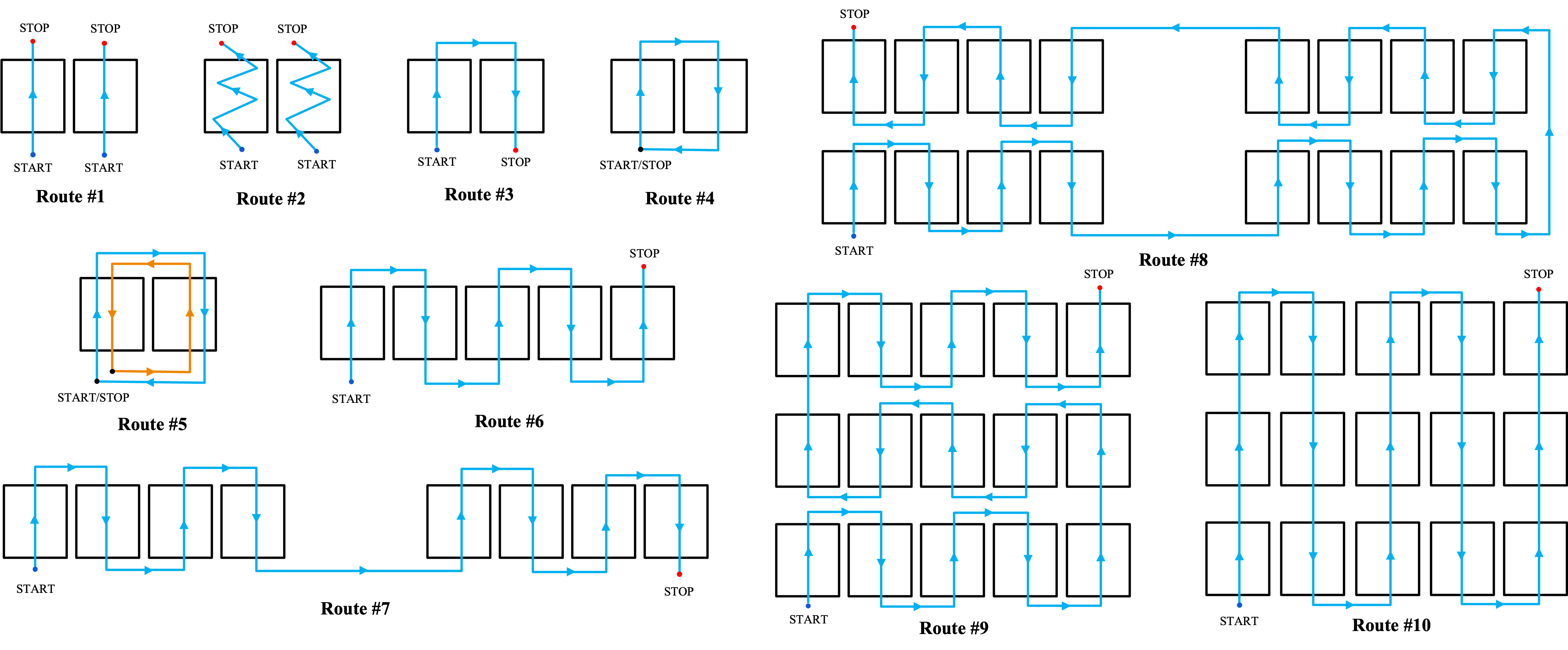

The synthetic split provides RGB, Depth, Semantic Segmentation, LiDAR, IMU, and GPS/pose data recorded in a 3×5 layout of connected greenhouses along 10 canonical routes (loop, straight, zig-zag, in/out), rendered at 960×540 px.

The real split offers ZED2i RGB-D, Alvium NIR, and IMU recordings across multiple farm sessions, with optional Insta360 360° context, captured at 3840×2160 px resolution.

In total, the dataset contains 847,243 files (15 videos, 674.3 GB). Filenames are timestamp-based and shared across modalities, which makes synchronization straightforward, and the folder layout is consistent, which simplifies writing loaders and baselines.
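Because every modality shares the same timestamp-based filename, frames can be grouped by filename stem. The sketch below assumes, hypothetically, that each modality sits in its own subfolder of a route directory (names such as `rgb/` and `depth/` are placeholders, not the documented layout) and that a file's stem is its timestamp; `group_by_timestamp` is an illustrative helper, not part of a released loader.

```python
from pathlib import Path
from typing import Dict, List


def group_by_timestamp(route_dir: Path, modalities: List[str]) -> Dict[str, Dict[str, Path]]:
    """Return {timestamp: {modality: file}} for timestamps present in every modality.

    Hypothetical layout: route_dir/<modality>/<timestamp>.<ext>, one file per
    timestamp per modality (a second file with the same stem would overwrite the first).
    """
    if not modalities:
        raise ValueError("at least one modality is required")
    per_modality: Dict[str, Dict[str, Path]] = {}
    for modality in modalities:
        folder = route_dir / modality
        # Map timestamp stem -> file path for this modality.
        per_modality[modality] = {p.stem: p for p in folder.iterdir() if p.is_file()}

    # Keep only timestamps that exist in all requested modalities.
    shared = set.intersection(*(set(files) for files in per_modality.values()))
    # Lexicographic order equals chronological order only if stems have a fixed width.
    return {ts: {m: per_modality[m][ts] for m in modalities} for ts in sorted(shared)}
```

Intersecting the stems drops frames that a modality is missing, so every returned sample has all requested inputs.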

We also establish a benchmark suite evaluating recent methods in object detection and semantic segmentation (e.g., YOLOv8, Mask R-CNN, SegFormer) to assess sim-to-real generalization across multi-sensor inputs (RGB, Depth, NIR).
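The exact evaluation protocol of the benchmark is not specified in this section. As a hedged illustration, the standard segmentation metric, mean intersection-over-union (mIoU), can be computed from uint8 label masks as below, assuming ground-truth masks exist for the split being evaluated; `mean_iou` is a hypothetical helper, not the benchmark's own code.

```python
from typing import Optional

import numpy as np


def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int,
             ignore_index: Optional[int] = None) -> float:
    """Mean IoU over the classes that occur in the prediction or the ground truth."""
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    # Optionally skip pixels whose ground-truth label is `ignore_index`.
    valid = np.ones(target.shape, dtype=bool) if ignore_index is None else target != ignore_index
    # Widen uint8 labels so t * num_classes cannot overflow.
    p = pred[valid].astype(np.int64)
    t = target[valid].astype(np.int64)
    if p.size and (p.max() >= num_classes or t.max() >= num_classes):
        raise ValueError("label value >= num_classes")
    # Confusion matrix: rows are ground-truth classes, columns are predicted classes.
    conf = np.bincount(t * num_classes + p, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    intersection = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - intersection
    present = union > 0  # ignore classes absent from both masks
    return float(np.mean(intersection[present] / union[present]))
```

For sim-to-real transfer, the same function would be applied to predictions on real frames from a model trained only on the synthetic split.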

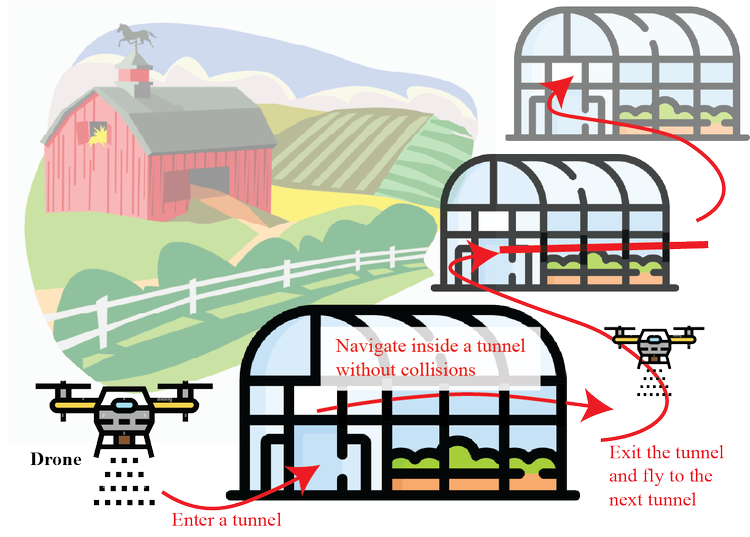

AgriNav-Sim2Real targets tasks in navigation and obstacle avoidance, depth estimation, semantic segmentation, cross-modal fusion (RGB-D/NIR), and sim-to-real transfer (train synthetic → evaluate real).

Dataset Highlights

Synthetic Dataset Highlights ✨

Modalities: RGB, Depth (PFM), Semantic Segmentation (uint8 masks), LiDAR (ASCII), IMU, GPS/pose.

Environment: 3×5 connected greenhouses with dynamic lighting, wind, clutter, and 10 route classes (Route #1–#10), rendered via Unreal Engine + AirSim.

Organization: Per-route folder structure with timestamp-based filenames shared across modalities; optional scenes/ (for domain randomization) and routes/ (for waypoints) folders.

Use Cases: Synthetic data pretraining, sensor fusion benchmarking, 3D reconstruction, and sim-to-real transfer learning.
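Depth is stored as PFM and LiDAR as ASCII. A minimal reader for both follows, assuming the standard PFM layout (a `Pf`/`PF` header, a `width height` line, a scale whose sign gives the byte order, then float32 rows stored bottom-to-top). The LiDAR column layout is not documented here, so `read_ascii_lidar` assumes x y z in the first three columns; both function names are illustrative.

```python
from pathlib import Path
from typing import Union

import numpy as np


def read_pfm(path: Union[str, Path]) -> np.ndarray:
    """Read a PFM depth file into a float32 array (H x W, or H x W x 3)."""
    with open(path, "rb") as f:
        header = f.readline().strip()
        if header == b"Pf":
            channels = 1          # single-channel, e.g. a depth map
        elif header == b"PF":
            channels = 3          # three-channel PFM
        else:
            raise ValueError(f"{path}: not a PFM file (header {header!r})")
        width, height = map(int, f.readline().split())
        scale = float(f.readline().strip())
        # A negative scale marks little-endian data; positive means big-endian.
        dtype = "<f4" if scale < 0 else ">f4"
        data = np.frombuffer(f.read(), dtype=dtype, count=width * height * channels)
    shape = (height, width) if channels == 1 else (height, width, channels)
    # PFM stores scanlines bottom-to-top; flip to the usual top-to-bottom order.
    return np.flipud(data.reshape(shape)).astype(np.float32)


def read_ascii_lidar(path: Union[str, Path]) -> np.ndarray:
    """Load an ASCII point cloud as an N x 3 array of x, y, z.

    Hypothetical layout: one point per line, whitespace-separated, with x y z
    in the first three columns (extra columns such as intensity are dropped).
    """
    points = np.loadtxt(path, ndmin=2)
    return points[:, :3]
```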

Real Dataset Highlights ✨

Modalities: ZED2i RGB-D + IMU; Alvium 1800 U-501 NIR; Insta360 X3 for 360° context. The capture setup also supports a FLIR Lepton LWIR camera, but LWIR data is not included in this release.

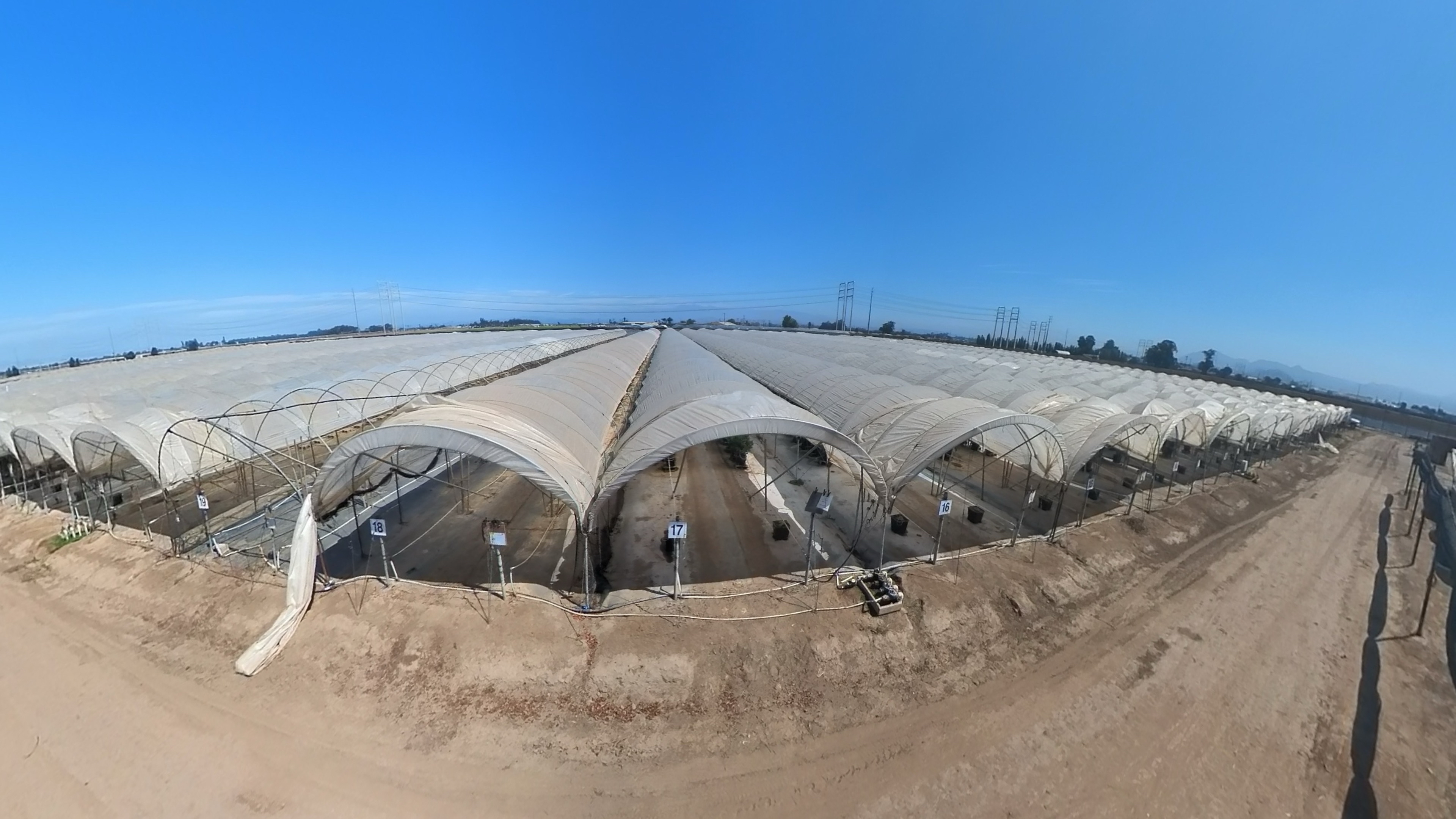

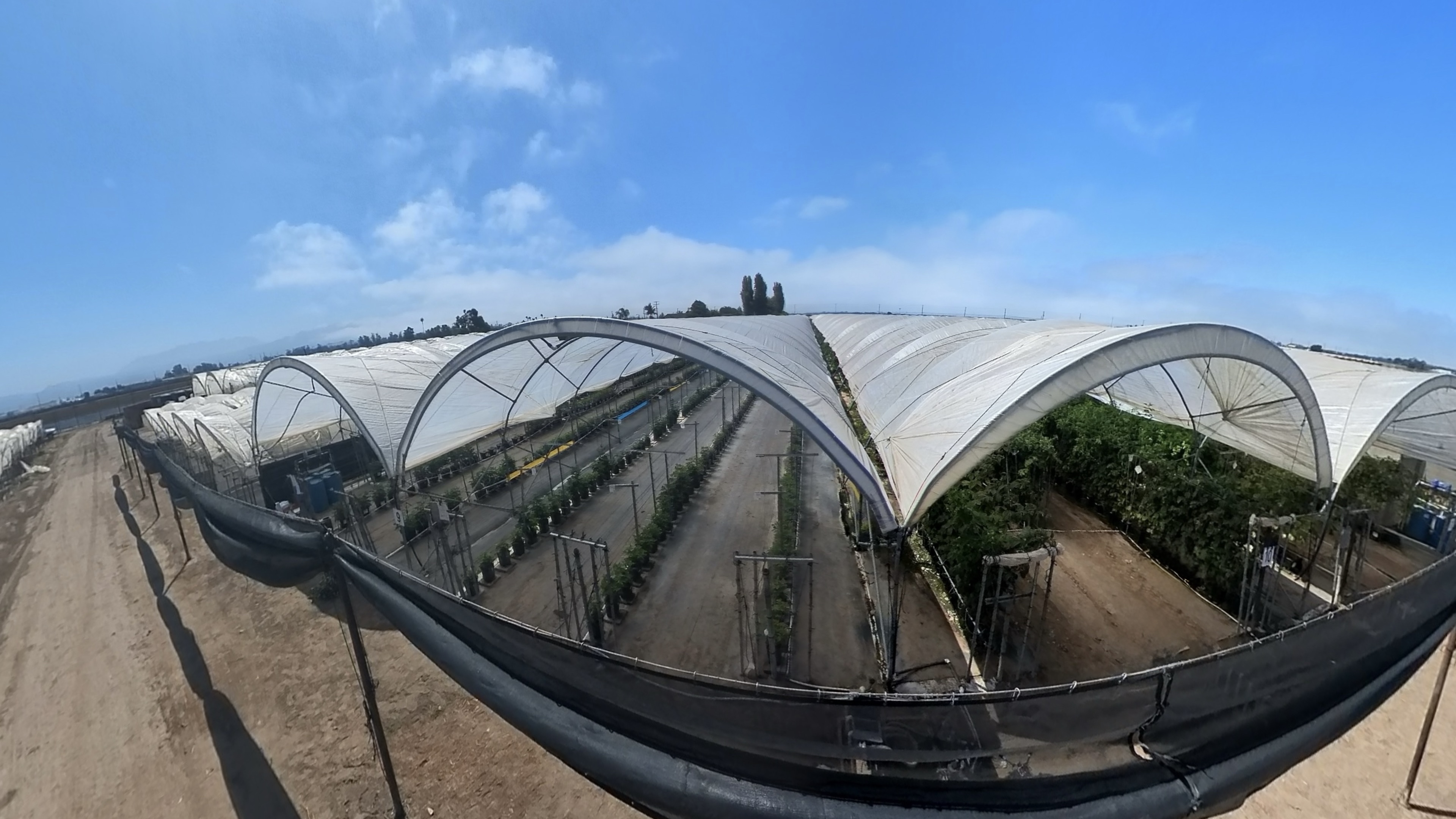

Environment: Field recordings from commercial greenhouses and open-farm setups, covering varied lighting and weather conditions and several crops (strawberries, blueberries, blackberries).

Organization: Per-session folders organized by timestamp and sensor type (e.g., nir/, imu/), synchronized for multimodal processing.

Use Cases: Real-world validation of trained navigation policies, depth estimation, and cross-modal RGB↔NIR domain adaptation.
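When sensors run at different rates (for example IMU versus cameras), their timestamps may not coincide exactly. One common approach, sketched here as an assumption rather than the dataset's documented synchronization procedure, is nearest-neighbour matching with a maximum tolerance. Timestamps are assumed to be integer ticks parsed from filenames; their unit is not specified here, so `max_gap` must use the same unit. `align_nearest` is a hypothetical helper.

```python
from typing import Sequence

import numpy as np


def align_nearest(ref_ts: Sequence[int], other_ts: Sequence[int], max_gap: int) -> np.ndarray:
    """For each reference timestamp, return the index of the nearest timestamp in
    `other_ts` (which must be sorted ascending), or -1 if it lies more than
    `max_gap` away."""
    ref = np.asarray(ref_ts, dtype=np.int64)
    other = np.asarray(other_ts, dtype=np.int64)
    if other.size == 0:
        return np.full(ref.shape, -1, dtype=np.int64)
    # Position where each reference timestamp would be inserted into `other`.
    idx = np.searchsorted(other, ref)
    left = np.clip(idx - 1, 0, other.size - 1)
    right = np.clip(idx, 0, other.size - 1)
    # Pick whichever neighbour is closer (ties go to the earlier sample).
    nearest = np.where(np.abs(ref - other[left]) <= np.abs(other[right] - ref), left, right)
    gap = np.abs(other[nearest] - ref)
    # Reject matches that are too far apart in time.
    return np.where(gap <= max_gap, nearest, -1)
```

For example, calling `align_nearest` with NIR timestamps as `ref_ts` and ZED2i timestamps as `other_ts` gives, for each NIR frame, the index of the closest ZED2i frame, or -1 where no frame falls within the tolerance.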